Generative Artificial Intelligence: Humans are good at analyzing things. However, it now seems that machines might be able to do it better. Machines can analyze data tirelessly around the clock, continuously finding patterns in all kinds of human scenarios: credit card fraud alerts, spam detection, stock price predictions, and personalized recommendations for products and videos, among others. They are getting steadily smarter at these tasks. This is called “Analytical AI” or “Traditional AI.”

However, humans are not just good at analyzing; we are also good at creating. We write poems, design products, make games, and write code. Until 2022, machines had no chance of competing with humans in creative work; they could only handle analytical and rote cognitive tasks. But now (yes, right now), machines have started trying to surpass humans at creating beautiful and emotionally resonant things. This new category is called “Generative AI (GenAI).” It means that machine learning has started to create entirely new things rather than just analyzing existing ones.

Generative AI (GenAI) will not only become faster and cheaper; in some cases, its output will be better than what humans create by hand. Every industry that requires human creativity, from social media to gaming, advertising to architecture, coding to graphic design, product design to law, and marketing to sales, awaits a complete transformation. Some functions may be completely replaced by GenAI, while in others it may inspire new ideas beyond human imagination.

Generative Artificial Intelligence Transformer: A New World

As someone who has lived through several market cycles, I have witnessed the ups and downs of different eras, such as those of the communications industry, the IT industry, and the mobile internet industry. So now that the AI era has begun, rather than jumping in blindly, it is better to first understand the fundamental logic of this new cycle, starting with its knowledge base.

If TCP/IP, HTML, and other knowledge structures were the foundation of the previous era, then facing the GenAI era, we should ask ourselves: “What is the knowledge base of the GenAI era?” Based on the development of AI so far, this knowledge base might be the Transformer.

What is Generative Artificial Intelligence (GenAI)?

1. Overview of Transformer

Welcome to the new world of Transformers. In the past five years, many exciting changes have occurred in the AI world, driven by a paper called “Attention is All You Need,” published in 2017. This paper introduced a new architecture called the Transformer.

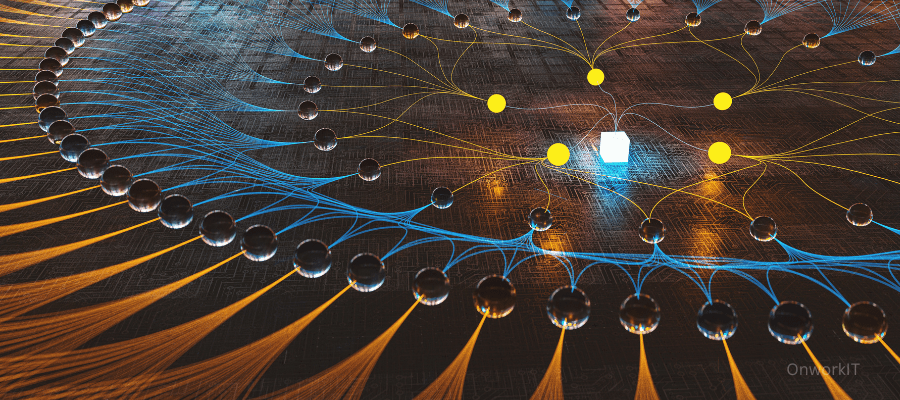

Transformers made two major contributions to machine learning. First, they improved the efficiency of using parallel computing in AI. Second, they introduced the concept of “Attention,” allowing AI to understand relationships between words. Technologies like GPT-3, BERT, and Stable Diffusion are results of the Transformer architecture’s evolution in different fields.

2. Attention Mechanism

What is the attention mechanism? According to the paper, the attention function maps a query and a set of key-value pairs to an output, where the query, keys, values, and output are vectors. The output is computed as a weighted sum of the values, with the weights determined by the compatibility of the query with the corresponding key. Transformers use multi-head attention, a parallel calculation of a specific attention function called scaled dot-product attention.

A more understandable definition from Wikipedia states: “Attention is a technique in artificial neural networks that mimics cognitive attention. This mechanism enhances the weight of certain parts of the input data while diminishing the weight of others, focusing the network’s attention on the most important parts of the data, depending on the context.”
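The attention function described above can be sketched in a few lines of plain Python. This is only a minimal illustration, not the paper's reference implementation: it covers a single attention head with no learned projection matrices, and the function names and toy vectors are hypothetical, chosen for this example. For each query, it scores every key with a dot product scaled by the square root of the key dimension, turns the scores into weights with a softmax, and returns the weighted sum of the values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key, and value vectors.

    queries and keys hold vectors of length d_k; values holds one vector
    per key. All names here are illustrative assumptions.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compatibility of the query with each key: scaled dot product.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted sum of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy data (hypothetical): three key-value pairs and one query.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[4.0, 0.0]]

outputs, weights = scaled_dot_product_attention(queries, keys, values)
print("weights:", [round(w, 3) for w in weights[0]])
print("output: ", [round(x, 3) for x in outputs[0]])
```

In this toy run the query lines up with the first and third keys, so those two values receive most of the weight while the second is almost ignored. That is the "enhance some parts, diminish others" behavior in the definition above. Multi-head attention, as used in Transformers, runs several such functions in parallel on different learned projections of the same inputs and combines the results.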

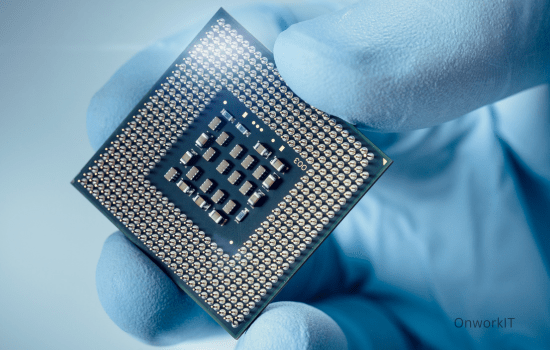

3. Transformer in Chips

Many AI experts believe that the Transformer architecture will not change much in the next five years. That is why chip manufacturers, like NVIDIA with its H100 chip, are integrating Transformer Engines into their hardware.

At AWS re:Invent 2022 in Las Vegas, NVIDIA architects discussed how to use their new chips on AWS for deep learning training, emphasizing the design and purpose of the Transformer Engine in the H100 chip.

4. Evolution Timeline of Transformers

One interesting perspective comes from arranging the various Transformers in chronological order. Some significant papers that led this GenAI wave include:

- CLIP paper in 2021;

- Stable Diffusion and DALL-E 2 in 2022;

- GPT-3.5, ChatGPT, and BLOOM in late 2022.

The evolution of this new world has just begun, and there is still plenty of time to learn about Transformers!

Generative Artificial Intelligence (GenAI)

1. Why Now?

Generative AI and broader AI share the question: “Why now?” The answer includes:

- Better models;

- More data;

- More computing power.

The evolution of GenAI is much faster than we imagined. To understand the current moment, it’s valuable to look at the history and paths AI has taken.

First Wave: Dominance of Small Models (Before 2015)

Small models were considered state-of-the-art in language understanding, excelling in analytical tasks from delivery time predictions to fraud classification. However, they lacked the ability to perform general generative tasks like human-level writing or coding.

Second Wave: Scale Race (2015-Present)

The milestone paper “Attention is All You Need” in 2017 described a new neural network architecture for natural language understanding called the Transformer. This architecture could generate high-quality language models, was more parallelizable, and required less training time.

As models grew larger, they started to outperform humans in handwriting, speech and image recognition, reading comprehension, and language understanding. The GPT-4 model, for example, showed significant improvements over GPT-2.

Despite progress in foundational research, these models were not widely used due to their size and complexity. Access was limited, and using them was costly. However, early GenAI applications began to emerge.

Third Wave: Better, Faster, Cheaper (Post-2022)

Cloud technology companies like AWS have made machine learning computation more affordable. New technologies, like diffusion models, reduced the cost of training and inference, allowing further development of better algorithms and larger models. Access to these models expanded from closed to open beta and, in some cases, open-source.

Fourth Wave: Emergence of Killer Applications (Now)

With the foundation solidifying, models getting better/faster/cheaper, and access becoming more open, the stage is set for an explosion of creativity at the application layer. Just like the mobile internet explosion a decade ago, new types of GenAI applications are expected to emerge.

2. GenAI: Application Layer Blueprint

The following diagram describes the application landscape of GenAI, showing the platform layer that supports each category and the potential types of applications that will be built on top of it.

The Fastest Growing Fields

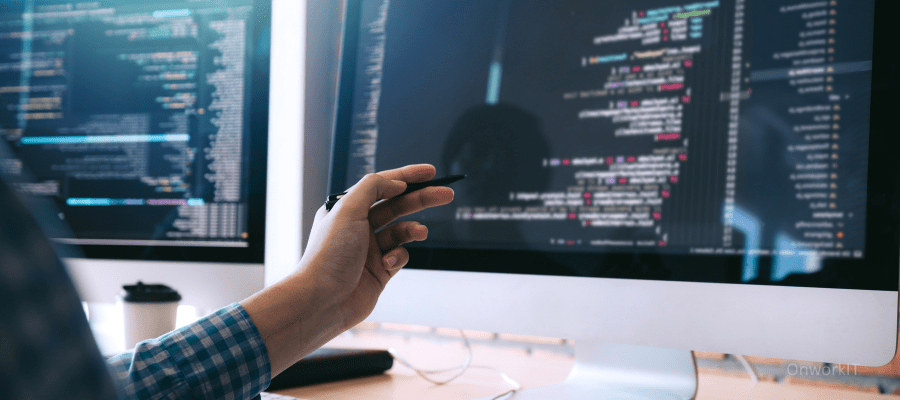

Code Generation: Code generation might significantly impact developer productivity in the short term, as demonstrated by Amazon CodeWhisperer.

Images: Image generation is a newer phenomenon. We’ve seen the emergence of different styles of image models and various techniques for editing and modifying generated images.

Speech Synthesis: Speech synthesis has been around for a while (e.g., “Hey Siri!”). Like images, today’s models provide a starting point for further refinement.

Videos and 3D Models: Video and 3D model generation are rapidly advancing. People are excited about the potential of these models to open up large creative markets, such as movies, games, virtual reality, and physical product design.

Other Fields: From audio and music to biology and chemistry, many fields are developing foundational models.

The diagram below shows how we expect foundational models to progress and the timeline for related applications becoming feasible.

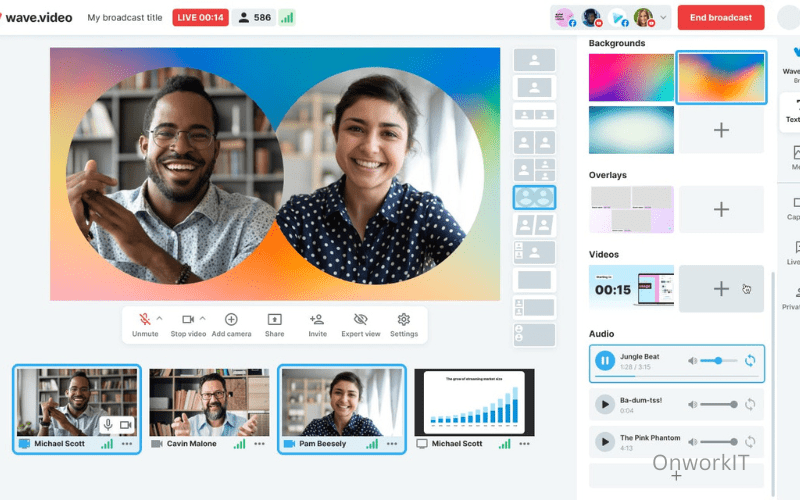

Generative Artificial Intelligence (GenAI): Text-to-Image Direction

Looking back over the past year, two directions in AI-generated content (AIGC) have made remarkable progress. One of these is the text-to-image direction. According to the official Amazon Web Services blog, users can now easily use the Stable Diffusion model in SageMaker JumpStart to generate imaginative artworks.

Example responses to the inputs “a photo of an astronaut riding a horse on Mars,” “a painting of New York City in impressionist style,” and “dog in a suit.”

We will have more in-depth discussions on the text-to-image direction, including paper interpretations and example code, in our machine learning and other special topics.

This article is part of the introduction to Transformers and Generative Artificial Intelligence. In the next article, we will discuss another important direction in Generative AI: text generation. We will share the latest progress in this field and Amazon Web Services’ advancements and contributions in compiler optimization, distributed training, and support for these large language models (LLMs).

Stay tuned for more technical shares and cloud development updates for developers!

Author: Huang Haowen. Huang Haowen is a senior developer advocate at Amazon Web Services, focusing on AI/ML and data science. He has over 20 years of experience in architecture design, technology, and entrepreneurial management across the telecommunications, mobile internet, and cloud computing industries, and has previously worked at Microsoft, Sun Microsystems, and China Telecom. He specializes in providing AI/ML, data analytics, and enterprise digital transformation consulting services to clients in gaming, e-commerce, media, and advertising.